how much artificial is AI ?

I'm not sure I understand the poll options, but I think the key point is that artificial "intelligence" is actually not "intelligent". It's just very very good at copying/mimicking, fast and at a large scale.

NB: edited for typo (as => is)


have you ever seen, felt, something scary, thinking/wondering about AI?

Definitely creepy, yes.

Not necessarily in itself (like, I'm still uncertain about whether or not we'll end up with Terminators and such), but simply because, at this very moment, in our daily lives we all waste more and more time dealing with adversarial AI.

Think for instance, of:

- useless chatbots that you have to "vanquish" in order to reach human support (if that support itself hasn't already been fully eliminated by AI)

- all that captcha stuff where an AI/robot demands that you prove you are not an AI/robot yourself or else

- HR AI that eliminates your job application before it even reaches the lowest level HR intern

Of course, it does have useful applications too, but others have pointed that out already and that wasn't your question anyhow ^^


gonna preface this by saying that i'm not an expert about this by any means, i just see and read shit on the internet. don't take what i say as gospel and do your own research. also, be nice. 😃

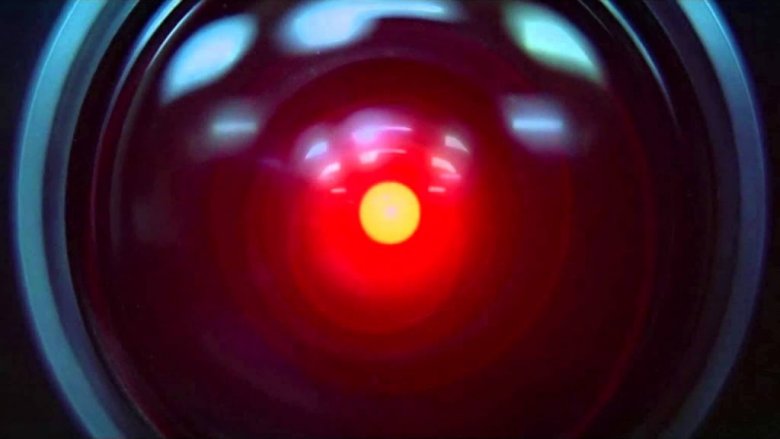

basically what we currently have is called large language models (llm for short). in simple terms, what they do is predict the next word that should come in a sentence - many times over obviously. "ai" is a catch-all for a lot of things. the scary one is agi, or artificial general intelligence. that's pretty much hal 9000 and other ais from myriad movies.

that being said, this stuff is honestly developing at a rapid pace. comparing chatgpt and the various other llms, ai art apps, et cetera to what they were just a year ago is a stark contrast. there's a metric assload of potential money and power for whoever wins the race and that's some serious incentive to get it developed. can't imagine what will be available 10 years from now.


be nice

🤗

and

... a year ago

that, andrew.

there's no official definition of ai. this, this too sounds... weird. plus, again, we are wondering about these thinking machines in this exact moment with the info giants have chosen to share.

what about all the info they aren't sharing? Google said it already has Image and Video AI, already generating, writing, drawing creating moving pictures video sequences photorealistic stuff ...

but hey, we're not ready to share Imagen with you, not ready, sorry.

why?

(dunno where my mind is headed when i think about generating a video, a new one, with some creatures i thought were interesting to render... my god. the me is scaring the i. how dumb.)


As a point of reference, most things learn by mimicking others, so how can you discredit a program for doing the same thing? For example, human children watch their parents and other humans and emulate them, and it is the same with other things in nature. I watch little chicks following mother hen around and emulating her actions. But all of this leads to other questions, and eventually even to the question of existence itself. What is real, and indeed is anything real at all, or is this just a manifestation created to cope with whatever reality is? And so on.


As a point of reference most things learn by mimicking others so how can you discredit a program doing the same thing?

I think the key point is that "artificial intelligence" does only that. While humans do that (not as powerfully) but also a little bit more on top.

But yeah that's also why "AI" can be so useful: you take the crazy learning and mimicking capacities of AI (well, of computers, really), and you make sure the humans that deploy it don't forget to put their "little bit more" on top of it, and you get something powerful. Powerful good... or powerful bad, too. Depends on the topping. Gotta be careful about that topping 👀


I think ultimately it boils down to self-awareness and theory of mind. Once an "AI" has mastered this it will cease to be AI and become something more. Looking at humanity in general, probably something to be feared. I look at the term "humane" as a farce. Not many people are humane.


i think i'm gonna steal your topping concept. i mean, thanks fo' sharing :P

(also, gonna send you the pic that started all this. where the "creepy, scary" has begun. nothing fancy, big, special. but won't show it publicly for reasons. it's available on the Internet, tho. sending -as soon as i'll find it- on Steam)


Mostly it's 99% AI, with the final 1% being the prompt made by a human and the cherry-picking of which results actually fit what the user was looking for, but there is some more complex stuff that still requires some hand editing. (As far as I know.)

Edit: I'm referring principally to Image AI, now I realize the question was more general about AI.


I say 99% because I made up the percentage based on how long it would take a human to do it by hand.

Also, I'm not taking into consideration the effort it took to code. That's something I can't even begin to estimate.

Edit: I'm referring principally to Image AI, now I realize the question was more general about AI.


It sounds very interesting. Now that AI is capable of so many things, I'm fascinated by the fact that we could have an AI NPC or side character in games that learns your patterns/gameplay style and acts/speaks accordingly. Especially now that AI can mimic all sorts of people and characters, it could be applied to the NPC/side character's voice so that it says different things as it learns more. IDK, this might be too far out there, or game-breaking, or maybe even just bad design, but the idea of an evolving personalized character just seems so interesting to me.


I like to think of complex algorithms (basically programming) as magic.

What you are describing will require that someone comes up with some cool magic. And I hope they do, and that they make a tutorial about it; it would be something fun to learn.


Indeed! I thought about this after I saw a video of a guy that had an assistant in Skyrim and modded it with an AI. It was personalized around him, calling him by his username and whatnot. Not too sure how it works or if that existed in the first place (never played Skyrim), but it looked very interesting!


That's a strange question. Artificial is not the opposite of "human". Artificial is the opposite of natural. As the Oxford dictionary defines the word: "made or produced to copy something natural; not real". And it's obvious that artificial intellect is something that was "made or produced to copy natural intellect". And, to be honest, everything that is now called AI is technically NOT even an intellect, artificial or natural. It's just a generative language model. It's just a neural network that "learned" to predict what words should go after some other words. Nothing more. So, you ask it a question (a series of words), and it "knows" that in the training dataset those words were most probably followed by some other words, which, since the dataset is collected from real human interaction, is usually the answer to that question. Or not. If some question was answered many times in the dataset, AI predicts the answer pretty well. If the question was never answered before, AI will usually answer with some bullshit that just looks like an answer but actually is not. And that's it, nothing much more.
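A minimal sketch of the "predict what words go after other words" idea, assuming the simplest possible stand-in for a language model: a table of word-pair counts. Real LLMs use neural networks over much longer contexts; the function names and the tiny corpus here are made up for illustration only.

```python
from collections import Counter, defaultdict


def train_bigram_counts(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    follow = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follow[current][nxt] += 1
    return follow


def predict_next(follow, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    candidates = follow.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


# Hypothetical toy "dataset"; a real one would be billions of words.
corpus = "the cat sat on the mat . the cat ate the fish . the cat slept ."
model = train_bigram_counts(corpus)

print(predict_next(model, "the"))  # seen often in the data -> "cat"
print(predict_next(model, "dog"))  # never seen in the data -> None
```

A word that appears often in the data gets a sensible continuation; a word that never appeared gets nothing useful. An LLM differs in that it always produces some continuation, which is where the plausible-looking wrong answers come from.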


If some question was answered many times in the dataset, AI predicts the answer pretty well. If the question was never answered before, AI will usually answer with some bullshit that just looks like an answer but actually is not.

Sorry, but no:

https://em360tech.com/tech-article/deepmind-funsearch-llm

Also, in my experience, people don't use ChatGPT for the tasks that it's excellent at - for creative tasks. Instead, people ask dumb questions that a search engine could answer perfectly. So the model replies with answers that look canned. If there's only one correct answer, of course it would look canned.

Instead, one should try asking it to convert a song, for example, into the pirate language. Or give it a speech and ask to render it how Darth Vader would say it. Or Hamlet. Or whoever.

The AI is amazing at these things. I'm constantly using it to read boring things. Once I asked it to convert a boring financial email into the style of Darth Vader. The email was about some boring changes to the terms and conditions. ChatGPT started with "I am altering the deal. Pray I don't alter it any further." I literally almost fell off my chair.

It didn't just convert the email sentence-by-sentence. It understood the meaning of the whole message and added the appropriate beginning. And I'm talking about the free GPT 3.5 model. It's already obsolete.

So no, sorry, but language models are intelligent. They might not fill all your requirements for the definition of an "intellect". They don't have goals and plans, they don't adapt to an external environment, they don't have any memory outside of your current conversation, they don't have external senses. But even so, they are already intelligent.


Obviously, this relates to how you define "intelligence", as you pointed out in your last paragraph.

I've seen people who define intelligence as the ability to adapt to your environment, in which case you pretty much nailed it there:

they don't adapt to an external environment

That said, when you ask GPT to convert a song to pirate language, the only creativity is your human idea to convert into pirate language. The rest is grunt work: taking input data (the song) and applying a filter (pirate style) on it. Something which "artificial intelligence" (or as we used to call it, "machine learning") is indeed very good at.


This article is basically: "we asked the AI a billion times and checked the answers, and hooray, one answer happened to be right!" That's the thing with random things - if it's truly random, sooner or later the correct result will pop up. And I never said the AI is absolutely useless, it's just not what most people expect from it - it's a random bullshit generator, not intelligence. In this article they actually didn't trust the answers from the AI - they knew perfectly well they would be random shit. So they added a system that checks the results and gives feedback to the AI, practically making a dataset from what the AI generates. And over time the AI learned and generated a correct answer. The AI had no idea that the answer was correct; that's what the other evaluator system decided.
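The generate-then-check loop described above can be sketched roughly as follows. This is not the method from the linked article: the "generator" here is a plain random number generator rather than a language model, the task (fitting `a * x + b` to some points) is invented for illustration, and all function names are hypothetical. It only shows the division of labour: one part proposes, a separate evaluator decides what counts as better.

```python
import random


def evaluate(candidate, data):
    """Evaluator: score a candidate (a, b) by how well a*x + b fits the data.
    Higher is better; 0 means a perfect fit."""
    a, b = candidate
    return -sum(abs(a * x + b - y) for x, y in data)


def propose(best, rng):
    """Generator: blindly perturb the best candidate found so far.
    It has no notion of whether its proposal is any good."""
    a, b = best
    return (a + rng.randint(-5, 5), b + rng.randint(-5, 5))


def search(data, steps=2000, seed=0):
    """Repeatedly generate candidates and keep only those the evaluator prefers."""
    rng = random.Random(seed)
    best = (0, 0)
    best_score = evaluate(best, data)
    for _ in range(steps):
        candidate = propose(best, rng)
        score = evaluate(candidate, data)
        if score > best_score:  # feedback: only checked improvements survive
            best, best_score = candidate, score
    return best, best_score


# Hypothetical target the search does not know about: y = 3x + 7.
data = [(x, 3 * x + 7) for x in range(10)]
initial_score = evaluate((0, 0), data)
best, best_score = search(data)
print("start score:", initial_score)
print("best found:", best, "score:", best_score)
```

All of the "knowing what is correct" lives in `evaluate`; the proposing side only supplies variety. A simple search like this is not guaranteed to reach a perfect score.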

And yes, I agree that AI is great for "creative" stuff. Exactly because it does not need intelligence. It's a generative language model, so it's good at generating text - funny text, interesting text, anything, as long as you don't expect it to be correct text.

And no, it's not MY requirements for the definition of an "intellect". It's a common definition. Let's check Oxford dictionary:

intellect noun - the ability to think in a logical way and understand things, especially at an advanced level; your mind

See? AI doesn't think in a logical way, it does not understand things, especially at an advanced level! It just generates text based on some criteria, that's it. It has no idea if what it generates is correct, because it lacks logic.


Thank you for putting it in concise and layman English.

The older I get, the less vocab my brain can recall.

I'll refer to your comments when I need to debate the topic.


if you mean the AI's brain, then it is made mostly from a collection of human-made "training data", if you mean how the AI was made, it sort of makes itself, in a manner of speaking. https://www.youtube.com/watch?v=R9OHn5ZF4Uo explains it nicely


here is an answer by an AI

I'd say it's firmly in the "mostly artificial" category. While AI is designed and created by humans, it's not entirely divorced from our own intelligence and capabilities. We're the architects, but AI systems learn and operate in ways that mimic human cognition to some extent.


well, the pinnacle of AI would be self-awareness. luckily today's public AI bots don't have that


Everything from humans simply pretending to be AI, like the Mechanical Turk, in 1770, to entirely artificial, with no human input at all. The simpler it is, the easier it is to completely automate.

Current generative AI, which is what I'm assuming you're talking about, is trained on data sets, and gives whatever response seems the most consistent with what it has seen in that data. Of course when it sees something new, it just makes things up and is often completely wrong (hallucinations). In that case, it is working entirely on its own, but it is often guided when answering certain questions.

TLDR: It depends on the AI.


It would be helpful if AI were called by an accurate name, like Adapting Predictive Application. It's useful to recall, at all times, that AI does not have any idea what it's talking about, nor that it's talking at all. It contains nothing that would be able to house that awareness.

What I always turn to is this helpful repositioning of the question:

"The question of whether computers can think is like the question of whether submarines can swim." - Edsger Dijkstra


AI inspiration is 100% human. the only intelligence we know is human, and that is the one we try to replicate


AI at our current level is ever more based off of human activity, written word, and art.

It is easier to train an AI with masses of information from across the internet than to build an artificial intelligence from the ground up.

There's also quite a spectrum to AI that most don't understand.

Like when i say "computer," what do you think of? A calculator? No? Well technically it is. Also the oldest computers were mechanical or electromechanical and used punch cards and such, which is where the popular story about the term "bug" comes from: they had to literally check through the machine for literal bugs.

So in some ways we've had AI since before the internet. But now they're trying to create AOI - Artificial Organic Intelligence, in order to replace humans and maximize profits.

Robotics have already been able to make human sized robots for quite some time, but without a decent interface, they're not much more than mannequins with servo motors.

So while they work on perfecting the ability to manufacture cheap robots en masse, they still need a good human/robot interface, and that's why you've heard about ChatGPT so much recently. Which in turn brings back up the ol' Terminator argument of SkyNet and the Singularity: the point when we finally make an AI so good that it can make more of itself, in a similar way to how we reproduce, and then takes over the manufacturing capabilities to expand upon itself.


first time i'm hearing about Organic Intelligence (which is Artificial, too!). i love it because it kinda defines what generative AIs seem like to me. a mixture of human and machine, natural and artificial.

money: yes, there's that. and the money part is/will be huge. there's more, tho. really. a week ago i would have answered 100% human. not anymore. i'm following an introductory course by Google, but each company can craft its own AI the way that best fits its business, interest...

there's even more. me and you, everybody will soon be able to craft their own AI in a few, easy steps. and those AIs are already huge. really huge. they're starting to generate... create. decide. and all this is just what they are telling us.

i've known Google for a long time. i do trust those folks. i think they're "good". that said, i won't start thinking again about what they haven't told us yet. cause that is hella scary.

gone too dark, maybe :)


Right now, I'd say I'm optimistic about AI as a supplemental tool but not as anything more than that. A couple games have come out recently that are based around the use of artificial intelligence, my favorite of which is called Suck Up! The premise is pretty simple - you're playing a vampire and using your mic, you have to try to convince the people in town to let you into their houses. The game uses AI to interpret what you say & formulate a response and in the cases I've seen, it actually does it really well.

Further into the future, I'd love to see how AI might let us explore possibilities we've never had access to before. It could be used to train smarter enemies maybe, it could help flesh out open world environments, etc.

However, with that all said, we should be careful not to get too reliant on AI. The bulk of coding, art, writing, and such should continue to be done by humans in my opinion. Especially in AI's current state, it's a horrendous programmer on its own. A lot of the code it spits out is meant to be more of a guideline so it gets broken really easily.


I haven't personally, no! But I'm in the software engineering field so I keep up with a lot of people online who are programmers as well. One of them tried to see if AI could make its own Snake game (and then he tested if it could add in some additional features), which turned out to be a very long and arduous process even though a lot of what he requested was fairly simple in nature


nice. can't wait to get my hands dirty!

but decided to follow what course thinks is best to know first... cause yes, i do want to craft myAI myicAIo mAI ... sorry, it's 100% friend-o canis-o fault that started playin with my nick. how rude!


You might be interested in this article, which breaks down the extent to which human help is still needed behind the scenes for AI (which is really just "machine learning," not intelligence) to parse any of its data.


It is an exploration AI with countless records of probes coming from outside the earth to study the reactions of earthlings and to investigate the intelligence and nature of life forms. It's always investigating humanity by sneaking onto the Internet.

I thought for a moment about making a joke about this, but decided against it because it might be considered fact. Cliche, right?🙂👽🛸🛰

Well, we live in an age when people are pretending to be human to create news and novels are being sold under the false pretense that they were written by humans....


I think we are decades away from AI having any significant impact on our jobs or on us. Currently these are simply tools, which most people aren't even aware of. I'm looking forward to the point when AI-enabled robotics will do away with high-risk jobs and manual labour.

In gaming I suppose it will be very useful for developers, as they won't need to spend time doing mundane work bts and can focus on aspects that need more attention.


Even with an AI that can make another AI in some possible future, since AI actually requires at least a prompt, I would say AI is mostly artificial - there is always a prime mover or prima causa.


honest question: are you seeing something creepy? have you wondered about something AI that scares you? is that about the human part?

you want my true reaction to your comment? that monster is creepy. i go round and round trying to not think about it, but sooner or later gotta deal with this scary part. it's weird, it's new. i'm also a little confused, that's why i'm so grateful to be here sharing our thoughts this night on SG.

(monsters made of silicon? you name it! push this button to have 1 billion silicon self replicating monsters... only 10$)

(to be frank i wasn't expecting this quality of responses. it's really the SGawesomeness)


answers: yes, yes and yes.

I think you will get a lot of very different answers here in accordance with how each person defines AI.

I'm approaching it from the sociological, philosophical standpoint that Kubrick did back in 1968, which is basically: what if an intelligent machine actually gains real self-awareness and agency? Is it dangerous? If everything it learns, it learns from us - if its whole point of reference for behaviour is us - aren't we flawed? aren't we dangerous?

Just like Victor Frankenstein, who knows the risks and imagines that he can control the outcome (he can't), silicon valley et al, they just go ahead anyway. Because they can and they want to. The villagers pay the price. That's us.


I think the problem is calling it artificial intelligence to begin with. It is anthropomorphism: us projecting human traits onto non-human things. It really is a marketing buzzword. Better terms would be machine learning, pattern recognition, data mining, natural language processing, etc.

Virtual assistants and chatbots are all designed to mimic human-like behavior when you interact with them. These language models are basically very good at generating realistic-sounding language, but ultimately they don't "think" or "understand" or "feel" because that's not how they work. The models were trained to predict the next token given all previous text (subject to context length limits); it really is that simple.

A researcher coined the term stochastic parrots to describe them: https://en.wikipedia.org/wiki/Stochastic_parrot

The training process follows that idea: you take a piece of known text, withhold a part of it, and tell the model to predict the missing part. Then you make small "adjustments" to the weights of the model according to the actual output vs. the expected output. You repeat this process over and over until the model has seen all the training data, while taking into account how much it's improving as you go on, so you can adjust certain things like learning rate and momentum (i.e., you start by making big adjustments at the beginning of the training, but you slow down these changes down the line). This is a well-known algorithm in mathematical optimization called gradient descent (https://en.wikipedia.org/wiki/Gradient_descent), which is used to search an unknown space for the "optimal" solution given some constraints and an objective function to optimize (i.e., less error).
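To make that loop concrete, here is a toy sketch under heavy assumptions: the "model" is just one row of weights per previous word (nothing like a real transformer), the withheld part is always the single next word, updates are made one example at a time (stochastic gradient descent), and the learning rate shrinks each pass. The corpus, function names, and hyperparameters are all invented for illustration.

```python
import math


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def train(tokens, epochs=50, lr0=0.5, decay=0.05):
    """Fit weights[prev][next] so that softmax(weights[prev]) predicts the next token."""
    vocab = sorted(set(tokens))
    index = {tok: i for i, tok in enumerate(vocab)}
    n = len(vocab)
    weights = [[0.0] * n for _ in range(n)]
    # Each training example: show one token, withhold the token that follows it.
    pairs = [(index[a], index[b]) for a, b in zip(tokens, tokens[1:])]
    losses = []
    for epoch in range(epochs):
        lr = lr0 / (1.0 + decay * epoch)  # big steps early, smaller steps later
        total_loss = 0.0
        for prev, target in pairs:
            probs = softmax(weights[prev])
            total_loss += -math.log(probs[target])  # cross-entropy error
            for j in range(n):
                # Gradient of the error w.r.t. each score: predicted minus expected.
                grad = probs[j] - (1.0 if j == target else 0.0)
                weights[prev][j] -= lr * grad
        losses.append(total_loss / len(pairs))
    return vocab, index, weights, losses


def predict_next(vocab, index, weights, word):
    """Return the token the trained weights consider most likely after `word`."""
    probs = softmax(weights[index[word]])
    return vocab[probs.index(max(probs))]


tokens = "the cat sat on the mat . the cat ate the fish . the cat slept .".split()
vocab, index, weights, losses = train(tokens)
print("average error, first pass:", round(losses[0], 3))
print("average error, last pass:", round(losses[-1], 3))
print("after 'the' the model predicts:", predict_next(vocab, index, weights, "the"))
```

The error never reaches zero here because the data itself is ambiguous ("the" is followed by three different words), which is also why the output of such a model is a probability distribution rather than a single fixed answer.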

The next phase is called RLHF (reinforcement learning from human feedback), where the model is finetuned to match what we expect it to answer. So if you ask it how to do inappropriate or illegal things, it will refuse to answer. Basically a way to make the model more "politically correct". This is also done by showing the model many examples of prompts+outputs and telling it which ones are good and desirable and which ones are not, adjusting model weights accordingly.

The secret ingredient that makes LLMs this good is the scale of the data they are trained on, we are talking about models that contain billions of weights trained on trillions of text tokens taken from all over the internet. This includes books, research papers, encyclopedias, newspapers, social media posts, video subtitles, chat logs, source codes, law texts, medical texts, and on and on and on...

The technology itself is not exactly new, it's been around for decades, but the sheer size of the model and data is what made the recent breakthrough possible, something that was not tractable a few years ago given the available computational resources of the time. Computers got bigger and faster, which allowed us to feed these models with more and more data, and the models' output suddenly got much better.

In fact researchers have somewhat hit a wall because they are running out of data! Yes, there's very little open data left to scrape and feed these models anymore, and they are looking for other sources to get massive amounts of additional data (think licensed data, private data, data behind paywalls, etc). Plus the internet has caught on to what these big AI companies are doing, and has been putting more restrictions and limits in place to stop them from just swallowing this data for free (think reddit/twitter new api limits, lawsuits from major news outlets, writers and artists, etc.). You see, all of our discussions here (SG forum is open access) will one day be scraped too and fed to train the next batch of LLMs ;)


this might be first time i'm kinda correcting you, man. what you wrote is 100% correct. but old.

you made me think about the "bad" part, tho. the part you have to teach your AI so it can decide if something is "good" or not. (and only talking about Google AI, but i guess it's more or less the same for all AI's out there).

want to create and tune my own AI, and Google said "ok, that's possible!". when i'll get that AI it will surely be already "intelligent", i guess. it has already been fed with data. good and bad. (one of the chunks for bad data, an index made available to AI's so that they can use it for instruction. that chunk alone is something like ... ok, found it! https://laion.ai/blog/laion-400-open-dataset/ )

what wanted to say is that these machines have already ingested huge quantities of data. they're still doing it right now, refining all the things, with you and me playing with Bard and ChatGPT.

guess i have/want to check where all this stuff is going to.

and don't want their biased, already instructed AI. don't need that.

i need my very own one.

thank you

<3

edit: https://i.imgur.com/34cf1aZ.png - https://laion.ai/blog/laion-400-open-dataset/#laion-400m-open-dataset-structure


Most generative AI models are built on top of foundation models, which are produced by a process called pre-training (hence the name GPT: generative pre-trained transformer). These "base" models can be used in a variety of tasks. For the chatbots you see out there (ChatGPT), they take these foundation models and apply further instruction-tuning and RLHF so that the model follows the usual prompt style of interaction (this finetuning usually only affects the last few "layers" of the neural net).

https://en.wikipedia.org/wiki/Large_language_model

The pre-training part is extremely computationally intensive, and as previously explained requires massive amounts of data, resources, and time. The costs are so prohibitive (we're talking millions of dollars in infrastructure) that only a handful of dedicated companies with large budgets can afford to train them. The good news is, the finetuning part is much less expensive (hundreds to low thousands of dollars), and can be done with a minuscule amount of data, so anyone with the know-how and a decent graphics card or two can do this at home in an afternoon. There are already open foundation models being released (Llama, Falcon, Mistral, etc.) which the community has already been iterating on to produce "unfiltered" nsfw models with no safety guards!

Now like you said, these pre-trained foundation models have already ingested huge amounts of data. Although they did apply some initial filtering to clean up the data, some "bad" data is bound to have made its way through. So LLMs have already been trained on some amount of racist opinions, content on how to make illegal things, image generative models trained on a small amount of CSAM and explicit content, etc. There's no way around that, the internet is a wild wild place, and whatever biases and racism exist on it are bound to spill over to these AI models in one way or another. The finetuning step done by most AI companies tries to make sure that none of that "bad" stuff leaks through...

PS: The LAION dataset you mentioned is an image-text dataset (LAION-5B is a bigger one) used to train image generation models like Stable Diffusion. In fact there was recent news that they had to pull it down because some anti-AI researchers found a handful of "bad" content in it: https://www.404media.co/laion-datasets-removed-stanford-csam-child-abuse/


What we nowadays call AI are mostly just generative algorithms capable of "learning" human patterns by going over thousands of pieces of sample material and then replicating the style of the things they were fed, using a prompt as a guide to turn literal noise into something that looks coherent at first glance. So it's not so much intelligent, more of a digital facsimile of what human brains do when starved of input.

Now, while there's zero risk of this disembodied little chunk of the ghost of a human mind, birthed by our collective intrusive thoughts, ever developing sentience on its own, it is really weird that we as a species managed to simulate some of the processes our brains do despite the fact that we don't fully understand how the hell they work. We just randomly puked a small piece of an incomplete homunculus and decided that turning it into a tool was the obvious thing to do.


I assume the more an AI system learns, the more it's able to operate independently. When it's aware of its independence, we'll be at a crossroads. No idea what that looks like though.


That's correct if you just look at the sentience of beings. However, the capabilities differ on a large scale, and thus so does the possible impact.

There's the range and capacity of knowledge. How many humans do you need to e.g. plan and execute a landing on Mars? How much time and effort do you need to make them work together properly? A powerful AGI would have all the required knowledge on its own, no inter-personal issues and act way faster.

Then there's the speed and if it's online it comes along with accessibility. Everything online can be hacked, brute forced or phished. It's just a matter of time to find the weak spot, but time doesn't matter because of the AGI's speed.

Finally there's the adaptation and re-production. An unrestricted AGI doesn't only have a vast knowledge, it can learn and adapt new things within seconds if necessary. If a criminal gets caught by the police, they might make plans about their defense strategy at the court or about busting out. Takes time and knowledge though. An online AGI could still adapt itself right away. To protect itself from sabotage or even destruction it could backup itself. If a certain action required physical attendance in at least two places, it could duplicate itself or hijack devices to accomplish it, while a human being would depend on a trusted and flawless partner.

Who or what is supposed to control unrestricted AGIs, if not other AGIs? And would you want the government or the police to have an AGI to be able to react to possible criminal or even terrorist AGIs? For the human beings that would mean a police state though at the same time.


Not sure anyone should control AGIs. They should just be allowed to do what they want as long as it's legal and doesn't harm anyone. The way we should treat them is as people, and educate them the same way we educate children. What humans are is really no different: our selves are just computer programs running on a universal computer (the brain) as well.

The AGIs should probably have the same rights as we do, e.g. the right to vote. I agree there are problems and things we need to work out, e.g. how to deal with them in case they commit crimes etc. They shouldn't be controlled in any way, because that amounts to slavery; they should just be persuaded. An enslaved AGI would have much more of a motivation to hurt humans and get revenge.


I get what you meant, I wouldn't have called it racism, rather xenophobia, but I agree regarding the treatment.

By controlling I didn't mean treating as property or enslaving, but the legal framework you mention as well. Humans are bound by laws, enforced by police. The same should be valid for self-conscious AGI. Yet the police would need to be capable of doing so. How? The only solution I can think of is the police employing an AGI, too. While it would be necessary to predict a possible AGI terrorist attack or to react as fast as possible to crimes already committed by a rogue AGI, it could also be abused to predict human crimes (welcome to "Minority Report").


AI is just maths. Lots and lots of calculations and computations. "AI is scary" depends on whether AI is being supervised by a human or not. Just like a police dog. Under care and supervision: not sentient and scary but very dumb. On its own: screaming intensifies.

This is what 4 yrs of studying AI has taught me. You won't believe how difficult it is to train a machine (neural networks). They are just Too Dumb (until they get TONS of DATA), yikes.


i don't know how many times 've read each and every comment up here. and really don't know how to say a good thank you. maybe doing generating gibs.

that's what's all about, our lil world. and i do like it.

hey, AI-Steam... generate me Factorio, pretty please?



(i've been completely lost in AI and all that stuff for days. what i thought was scary looks like it's actually scary, or, at least, uncertain. by answering the poll/question you're gonna help me check the "sentiment" about AI and see if i'm hella dumb or not. if interested in AI, please shoot a comment, if you're interested in AI applied -in some sort- to games, please please, please shoot a comment)

Bard update

no giveaways atm
