The mining boom is over for now. Nvidia expected to earn $100 million from miners this quarter, but in fact earned only $18 million. And Ethereum is down from $1300 to $280.

https://www.marketwatch.com/story/nvidia-says-crypto-mining-boom-is-over-for-now-2018-08-16

Yea no, fuck those prices. A 2070 is probably gonna end up being over 700 euro monies over here. I am now 100% sure I am skipping this generation as well. See ya in another 2 years.

They will sell it as "kinda ray-tracing ready". So prices will be inflated, especially since they didn't allow prices of previous GPUs to drop: they bundled them with things like SSDs in "2 in 1" kind of deals instead of dropping prices even to pre-boom MSRP ranges.

1200 USD MSRP, this means it will be roughly 1800-2000 USD here.

Granted, I did not think there would be enough stupid people to buy the one-thousand-dollar iPhone, but Apple's quarterly stats say that yes, there were plenty of those. So I guess Nvidia can get away with the same level of overpricing, since most of their returning user base is about the same when it comes to rationality.

Yep, still staying on AMD here, it seems. At least I can afford it.

I have an iPhone X. Guess that makes me stupid then?

Honestly, if you paid one thousand dollars to get a telephone that does things only marginally better than a 500-dollar one (minus the Dual-SIM aspect, since Apple apparently still hasn't managed to invent that in the past decade), then yes, I guess it does. Especially considering that, with the coming 5G networks, it will become obsolete even faster than any previous high-grade iPhone model.

By the way, if you bought that upcoming 1k Samsung, I'd say the same. No phone can justify that price range, no hardware and use case like that exists. Even if you work all day on your phone, it does not bring the same amount of upgrade that its price point should justify.

I can well justify my decision. I am really not in the mood to explain this in detail now, and I have done it here quite a few times, I believe. Yes, it is an expensive phone. But I got it a bit cheaper through the company I work for. And it's the device I am using all day, every day. I use it more than my PC (which was quite a bit more expensive). The OS alone is worth it to me. I really dislike Android. And there are things you will actually have a hard time finding in a $500 phone. At the end of the day you should just acknowledge that different people value certain things differently. I can assure you I made an informed decision. And I would appreciate not being called stupid. It's truly amazing how often people get attacked or insulted just for the phone they prefer. You can have your opinion about certain devices. You can absolutely criticise them. But you should refrain from insulting the people who bought them, as this will rarely result in a fruitful discussion. I always liked you talgaby, but I also have to say your comments are often very negative, which I find is a shame. Attack the device, if you must, but not the people who own it. :)

How one values a thing they use is subjective and we generally pay somewhat more for a device that suits the current long-lasting needs more.

I just cannot see this being the case with a telephone. Same way how I cannot see anyone realistically justify a 2500-dollar office laptop that has 10% better specs than a 1500-dollar one. (Both even have the same damn support plan attached.) There was just not enough difference between the X and the 8 models.

(Also, yes, Android is rather terrible. But at least it invented dual-SIM support, and I rely on dual SIM phones for work, so the entire Apple line is out of the question as far as I am concerned ever since my Lumia died on me.)

As for the name-calling: you are likely right. On the other hand, everyone makes stupid decisions. Human nature. And if we never point out when someone makes a stupid decision, then it can lead to worse situations than putting up with some pouting for having said it. As my left knee has reminded me every single day for the past 12 years.

Gaming is now for rich people. I remember when the 8800 GTX retailed at launch at 599 and was considered "enthusiast" level. This is what happens in a monopoly market.

Besides, raytracing will render 900- and 1000-series cards obsolete, so players will be forced to upgrade if they want to keep playing at the highest settings. Great planned obsolescence at work. AMD please wake up, for fuck's sake, we need a competitor.

The funny thing is, even if AMD gets back in the GPU "war" (which I doubt), most users will still purchase $$$vidia... Competition can lower prices, but once there is competition, users just say "thank you AMD" and purchase a G/RTX xx80 Ti, simply because it is the fastest card on the market.

We can see this trend in the CPU market. Since the first Ryzen release, Intel has had to ship two generations (77XX; 87XX) in the same year, and now with the 9th gen they will use soldered thermal material again (last used with the 2nd gen) instead of the magical "toothpaste" (no point in delidding anymore?). But most users still look at Intel like the next World Wonder and praise them for innovations... Without Ryzen, we would still be getting the same 4C/4T CPU with a 5-10% increase from Intel, like in the 4 years before the Ryzen release.

That's exactly what I'm hoping for. More competition, better solutions for everybody.

It's why I'm also hoping for Xbox to come back in full force, so that Sony will wake up and do something more interesting than another action-cinematic game.

Sorry, but I disagree, for these reasons:

1) personally, this round I'm going for a Ryzen 2000

2) judging from the sales numbers, AMD is getting a bigger share quarter after quarter

3) Intel seems to launch CPUs like crazy without a real plan behind it

4) investors are betting on AMD now instead of Intel

All that summed up, I HOPE that AMD comes up with a GPU architecture as nice as Ryzen is.

Then we will have decent competition again.

Yep, over a few years AMD has done some serious damage in the CPU market. They are increasing their CPU market share and can finally put some money into their R&D budget (both CPU and GPU). Not sure about the investors, but AMD shares are pretty high ($20, compared to $1.8 three years ago), so yes, maybe you are right. However, according to the 2nd-quarter financial results, Intel had a ~6% revenue increase (compared to 2017) from the PC market alone, so they are still selling like crazy. On the other hand, Intel has the fastest GAMING CPU on the market.

But my point was, even if Ryzen is a very good product, most users simply look at clock speeds and brand name. Intel is the king in both areas. The same goes for Nvidia. The last time AMD had a superior product (if I remember correctly, it was in 2005-2006, HD68XX), even then sales weren't so good. It took almost a year till Nvidia responded. And we all know the rest...

The good thing is that AMD basically controls the console market (at least the current and next gen). Next-gen consoles (both Sony and MS) will use Ryzen (CPU) + Navi (GPU). So there is still some hope that the competition will survive.

> Gaming is now for rich people. I remember when the 8800 GTX retailed at launch at 599 and was considered "enthusiast" level. This is what happens in a monopoly market.

Compared to the RTX 2080 at $699, and considering inflation, that's not even that different. I admit though, the 8800 Ultra was not as expensive as the 2080 Ti (it also was not nearly as much of an upgrade over the 8800 GTX as the 2080 Ti is over the 2080). If you want an enthusiast card, the 2080 is definitely one. Enthusiast level is not only the single most expensive card on the market. Second place counts as well. ;)

I had a 8800 GTX back then. It was truly a beast, for years. They didn't release a better card for quite a while (except the Ultra, which was basically the same card with higher clocks).
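The inflation comparison with the 8800 GTX above can be put in rough numbers. This is only a sketch: the flat 2% yearly rate is an assumption chosen for illustration, not an official inflation figure, and the 12-year span assumes the 2006 launch of the 8800 GTX and the 2018 launch of the RTX 2080.

```python
def adjust_for_inflation(price, annual_rate, years):
    """Compound a past price forward by a constant yearly inflation rate."""
    return price * (1 + annual_rate) ** years


# Assumptions (not from the thread): a flat 2% yearly rate, and 12 years
# between the 8800 GTX launch (2006) and the RTX 2080 launch (2018).
ASSUMED_RATE = 0.02
YEARS = 2018 - 2006

adjusted = adjust_for_inflation(599, ASSUMED_RATE, YEARS)
print(f"$599 in 2006 is roughly ${adjusted:.0f} in 2018 at {ASSUMED_RATE:.0%} per year")
print(f"Difference to the $699 RTX 2080 price: ${699 - adjusted:+.0f}")
```

With a different assumed rate the result shifts accordingly, so treat the output as a ballpark rather than a precise figure.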

But you could buy an 8800 GTX for the MSRP. Now you have to take into account 10-20% extra on top of a card whose starting price is already $100 higher in comparison.

Sure, they definitely raised the prices over time. I don't want to defend that, I just wanted to put it in perspective a bit. This already happened with the last generation. I guess without real competition they can just do that. And as a customer that wants a high-end system you don't really have a choice.

But now the 2080ti isn't the top card, the next Titan is.

I know. But it's not been announced yet. We don't even know yet whether it will be aimed at the prosumer or consumer market.

It's like saying the Titan won't be the top card, the next-gen 2180 Ti will be.

You can't talk about cards not released or even announced yet.

I was just trying to say that splurging $1k on a GPU isn't a new thing. Yes, they have increased the price of the current generation GPU compared to the last generation, and I certainly won't be purchasing it at this new price, but it's not a new thing. It has happened in the past.

The thing is, AMD is not the good guy here. There are no good guys.

When AMD came up with Ryzen, everyone was so hyped about the performance and possibilities... But when the benchmarks came out, it all went down the drain. Sure, Ryzens are stronger for some specific applications, but when you look at gaming they simply perform like similarly priced Intel CPUs, so what's the difference?

Why should I bet on AMD, when they try to rip me off the same way Intel does?

Same goes with GPUs.

Sure, AMD is going to release a new generation of cards, and everyone is going to talk about how cheap they are.

But then the benchmarks will come out, and we'll discover that in real world games, it performed the same as similarly priced Nvidia cards.

That's not competition.

That's a price-fixing cartel - https://en.wikipedia.org/wiki/Cartel?wprov=sfla1

Now the best bet we have for CPU competition is Qualcomm with their Snapdragon CPUs, which are going to have OSes supporting them soon.

And the best bet we have for GPU competition is Intel working on their GPU cards.

But knowing the market and the players, they are not going to reduce prices and increase competition. Instead they are going to price the new products the same as existing products with the same performance, and try to maximize profits.

Because they don't care about reducing prices and winning over the market. They only care about selling at as high a price as possible and maximizing the profit on every card sold.

I disagree. While AMD products may not have had the top performance recently, they've often been a better value and absolutely made the landscape more competitive.

When Ryzen released it offered similar performance to Intel at a lower price and increased the core count by 50%. Sure, not everything could take advantage of the extra cores, but for those programs that could it was a huge boost. And given that games are increasingly becoming multi-threaded, it actually made Ryzen the better bet. As a result, Intel released a new i5 and i7 line that also had 6 cores at the 4-core price point, and I assure you that would not have happened had Ryzen not appeared - Intel would have just kept making insignificant improvements year after year while counting their money.

Likewise, AMD graphics cards have absolutely reduced the prices of Nvidia cards by offering better value. Remember how the Nvidia 10 series reference cards were supposed to carry a price premium over MSRP, but third-party cards would be priced lower at MSRP? Remember how that never happened, and instead third-party brands just set their pricing off of Nvidia's Founders Edition and ignored the MSRP entirely?

On release the RX 480 cards offered comparable performance to the GTX 1060 for ~20% less. I switched from Nvidia to AMD when I bought my RX 480 4GB on sale for $160, when the comparable GTX 1060 was $225+. Likewise, while Vega didn't blow away Nvidia, it did cause the GTX 1070 and 1080 to drop in price, and saw Nvidia release a 1070 Ti to compete. Of course, then mining blew all of that up, but before that, AMD was absolutely keeping Nvidia from inflating prices.

Nvidia responded to this by forcing its partners (Asus, MSI, Gigabyte, HP, Dell, etc.) to make their gaming brands exclusively Nvidia's and to remove all AMD GPUs from those brands. Nvidia now requires anyone who wants to be part of the RTX launch to sign a 5-year NDA. It is not only giving its Add-In-Board partners (ASUS, MSI, Gigabyte, etc.) a list of "approved reviewers" that they are allowed to send sample review cards to, but is also preventing its AIBs from distributing drivers with their review cards, which forces reviewers to sign the NDA in order to get drivers.

So while AMD may not necessarily be a "good guy", it seems that Nvidia is really trying hard to be the bad guy. And I say this as someone who's been using Nvidia cards exclusively for years, from the GTX 8800 to the GTX 670. They've recently become a brand that I no longer want to support. And don't even get me started on the huge up-charge for G-Sync.

> When Ryzen released it offered similar performance to Intel at a lower price and increased the core count by 50%. Sure, not everything could take advantage of the extra cores, but for those programs that could it was a huge boost. And given that games are increasingly becoming multi-threaded, it actually made Ryzen the better bet. As a result, Intel released a new i5 and i7 line that also had 6 cores at the 4-core price point, and I assure you that would not have happened had Ryzen not appeared - Intel would have just kept making insignificant improvements year after year while counting their money.

The thing is - that was all useless. For commercial use (complex calculations, graphical design, sound processing, etc.) Intel has already been releasing CPUs with higher core counts. And for home use - this is useless. It's a nice marketing trick to say: We now sell you 6 cores for the price we used to sell 4. But 2 additional cores don't give 50% more performance. Most PC applications (Email, Office, Browser, etc.) only use 1-2 cores as is. The one really straining usage of home PCs is PC gaming.

But if you look at PC gaming performance comparison (even for games with official multi-core support & optimization) you will see that i3 & i5 CPUs (with 4 cores & 4 threads) consistently outperform i7 & i9 CPUs (with up to 10 cores & 20 threads):

https://www.pcgamer.com/best-cpu-for-gaming/ (benchmarks are at the bottom of the page).

So 6+ cores are basically useless in the only use case where they could actually make a difference (a person checking his email will not notice the difference between 4 cores & 12 cores). So it was all a PR stunt to get people to buy new CPUs (because they have MORE CORES), while in fact not providing any improvement over the old CPUs (with fewer cores).

Are we looking at the same benchmarks? Because looking at the overall gaming benchmark you linked, the 4 core i5 processor is #8, with the 4 core i3 at #10. 14 of the top 16 processors are 6 cores or 8+ threads. 7 of the 8 processors they recommend as "Best CPU for Gaming" are 6 core. The 8th budget option is the only 4 core chip.

Now, obviously the benefit of 6 cores varies by game and engine, but many of the games where 4 cores shine are older (Witcher 3, Fallout 4, Rise of the Tomb Raider, and GTA V are all from 2015). And, obviously, 6 cores were very rare then, so they didn't scale the games and engines for more cores and threads. Now that 6 cores are much more common (Ryzen, new Intel i5 chips, PS4 and Xbox One), I expect that more and more new games will scale to 6+ cores.

Also, keep in mind that these benchmarks are normally done with nothing else running in the background. But that's not how I play. I have Chrome open in the background almost all the time. I have game launchers and controller software and AV and other stuff. Even if a game only scales to 4 cores, having an extra core to take care of all that background crap can still help.

> Are we looking at the same benchmarks? Because looking at the overall gaming benchmark you linked, the 4 core i5 processor is #8, with the 4 core i3 at #10. 14 of the top 16 processors are 6 cores or 8+ threads. 7 of the 8 processors they recommend as "Best CPU for Gaming" are 6 core. The 8th budget option is the only 4 core chip.

The first benchmarks are the general ones. When you click left & right you see the benchmark results for specific games - take a look there.

> Also, keep in mind that these benchmarks are normally done with nothing else running in the background. But that's not how I play. I have Chrome open in the background almost all the time. I have game launchers and controller software and AV and other stuff.

Doesn't matter - none of these are running while you play, they're mostly idling & using up memory/disk space.

Taking a look at the individual benchmarks, I see that many older games from 2015 (Witcher 3, GTA V, Rise of the Tomb Raider, Fallout 4) don't scale past 4 cores / threads. Some newer games (The Division, Ghost Recon Wildlands, Sniper Elite 4, Shadow of War) care more about pure clock speed than having more than 4 cores / threads.

Ryzen is relatively recent, it was only released in March / April 2017.

Then there are a lot of newer games that absolutely scale past 4 - Ashes Escalation, Assassin's Creed Origins, Civilization VI, Dawn of War III, Hitman, Kingdom Come Deliverance. All of those benchmarks are dominated by 6 core / 8+ thread CPUs, and see slower Ryzen chips outperform faster 4-core Intel chips.

So obviously the benefit depends on what you're playing, but there are already a lot of games that take advantage of the extra cores. Given that more cores are becoming more common, and consoles have 6 cores, I would expect more games to scale to more cores / threads going forward.

Regardless, there are clearly already games that scale past 4 cores / threads. I think Ryzen is absolutely partially responsible for that. If AMD had not released an affordable 6 core chip, forcing Intel to do likewise, we'd never see games scale past 4 cores.

I disagree. Let's look at specific examples (the ones you claim strengthen your point). For example Civilization VI:

The Core i7-7800X's performance is barely any better than the i7-7700's, despite having 6/12 cores/threads instead of 4/8.

Both are significantly better than the i9-7900X, which has 10/20 cores/threads (roughly twice as many as the i7s above). And it's even slightly worse than the i5-8400, which only has 6/6 cores/threads.

If we look at Dawn of War III, for example, the situation is even more absurd: an i3-8100, with 4/4 cores/threads, from a much lower processor line (i3 is almost the lowest-end processor sold) and priced at ~$100, beats the i9-7980XE, which has a whopping 18/36 cores/threads and sells for almost $2000.

So even if 6 cores can give better performance than 4 cores (in a small selection of games), the number of cores is still useless once we go past 6 cores. The clock speed of a single core seems much more significant for overall gaming performance.

Trying? Just the list here shows that they stopped trying almost a decade ago and went full-blown supervillain: https://www.reddit.com/r/Amd/wiki/sabotage

The Nvidia vs AMD struggle is like watching a cartoon villain bully a mentally handicapped.

The 2080 costs $1150 here. I really wanted to buy a 2080 but I can't afford it at all.

The minimum wage is $260/month, and I can find a job paying $400/month at best. So I have to work for at least 3 months for a god damn computer part. F**k this country.
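As a quick check of the figures above, here is a minimal sketch of the months-of-work arithmetic. It assumes, unrealistically, that the entire monthly wage goes toward the card; the function name is made up for illustration.

```python
import math


def months_of_wages(price, monthly_wage):
    """Whole months of pay needed to cover a price, spending every cent on it."""
    return math.ceil(price / monthly_wage)


CARD_PRICE = 1150  # local RTX 2080 price quoted in the comment, in USD

for label, wage in [("minimum wage", 260), ("best-case job", 400)]:
    months = months_of_wages(CARD_PRICE, wage)
    print(f"{label} (${wage}/month): {months} months")
```

At $400 a month the card costs just under three full months of pay, which matches the "at least 3 months" estimate; on minimum wage it is closer to five.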

Wait a month or so then, until the lira starts freefalling again. Then this price will seem great in comparison.

At any rate, probably still better off than a Brazilian. With their electronics import tax, I guess a base 2080 will be around 2000–2200 dollars?

MSRP 1080 Ti vs MSRP 2080 Ti = +300 USD

Are they CRAZY?!?!

For +100 on the MSRP I could have started saving... With these prices, I'm keeping my GTX!!

It's actually $500 more if you look at the founders edition. The 1080 ti was $699 and the 2080 ti is $1199.

But the 1080 Ti FE was also $100 more expensive, if I remember correctly?

Not sure, Wikipedia lists the MSRP and FE as $699 for the 1080 ti.

Hm, I am almost certain the Ti FE was more expensive, just like the other ones.

Maybe it would be worth it just to get a 580 and hold out for a couple years?

The 580 is really old by now. Just doesn't hold up anymore. I am sure you can find better cards for that money. For instance, used 970s should be quite cheap now, I assume.

Which companies transfer warranty? EVGA right?

I won't buy used without any warranty I think. Had too many of the things die on me.

Yeah, I believe that's EVGA, and they give 5 years (better look that up first). I bought quite a few used cards in the past. Must have been lucky, they all worked flawlessly until I sold them again.

It has been only 3 years for a good while now. The seller's store may offer some more, but this is their best factory warranty.

Also, the transferred warranty gets voided if the card was overclocked. Nice little legal hole there—granted, not everyone OC's their cards.

It had been 2 years since Pascal launched, which is basically the longest nvidia has ever gone without a new line of GPUs. You shouldn't have believed them when it was such obvious bs. That said, the 1080ti is a great card that I'm sure will last you for a long time. Even if you really want raytracing, the 2000 series is the first generation of the tech and so is probably going to only be used in a very limited number of titles before the 3000 series releases (probably in another 1.5-2.5 years).

There have been talks about new cards much longer than that, though until this one they'd never turned out to be true.

I guess I took an official word from nvidia with a heavier weight than all the random rumors that had been talking about new cards releasing earlier in the year (and even last year) but I suppose I won't be making that mistake again.

Or you got "lucky". We won't know much until reviews come out, but I still need a new card (I'm running on onboard graphics with a Coffee Lake 8700K now). I think I will go for a 1080 Ti, skip this generation, and wait for the next one, because the move to 7nm should make it more worthwhile and the differences between the 1080 Ti and 2080 Ti seem too minimal.

You may be right! Time will tell on that, I suppose. Either way I'll force myself to not upgrade again for at least a couple more years. Next time I'll try to time it better.

If that does end up being the case, now might be the better time to pick up a 1080 Ti, should the newer cards end up not being worth it. There have been a good number of 1080 Ti sales lately, but if it turns out fewer people want the new generation, those will probably stop being so frequent.

I'm not gonna pay 1.8k+ EUR each year just because my previously top-of-the-line GPU is suddenly not the best. Instead of having great improvements in technology, everything is happening in small iterations and for premium prices because the market allows it. Why give everything at once when you can milk it over the course of several years by starting with a basic plan and going up in small increments? That's exactly what's happening right now and this is partly due to a lack of strong competition.

That was basically Intel's plan until AMD released their Ryzen CPUs. So I'm hoping that the same (from either AMD or Intel, I don't care) will happen for GPUs.

They hardly have any competition for the top-of-the-line segment right now so it'll be a difficult task to accomplish for the other industry players.

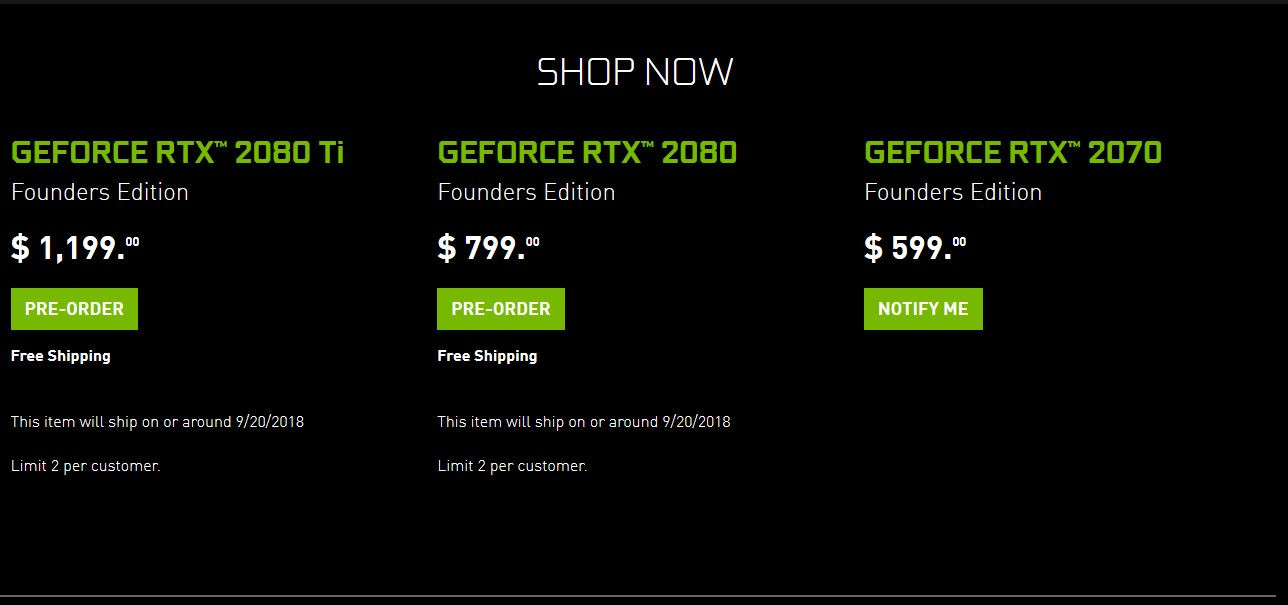

I hate how they quote 3rd party manufacturer MSRP and then sell the cards for way more money. They are actually selling from Nvidia for $600, $800, and $1200. That's insane. The 1080 ti went from $699 to $1199 and the 1070 went from $450 to $599. I was thinking of getting a 2060, but at these prices I will probably just buy a used older generation card like I usually do.

I guess that is what happens when there is no competition. I can't wait for Intel to start releasing GPUs. I really hope they can put out cards on par with Nvidia and create some competition again because these price increases are getting ridiculous.

I don't care what they have done in the past. They are planning on releasing video cards in 2020 and I'm sure they have been studying the competition for a long time and know what they are getting into. I think they will probably do better than AMD is currently doing.

I just meant that they have plans for 2020 which is the future and they are trying something new. I meant past as in anything they have already done. I think they recently hired 2 very experienced people to help design the GPUs.

We'll see. If their driver suite remains the way it is now, I fear that even making the best GPU on the planet won't help them much. Still, that is far away.

Plus, I am not sure it will actually be a good thing if the biggest CPU manufacturer, which holds a quasi-monopoly, tries to ruin the sandbox of the quasi-monopoly that holds the GPU segment, since it can lead to, well, an overall monopoly on processing units. Unless ARM chips really pull off a miracle and start being viable for desktop and server usage. Which they are light years away from now.

The thing is, why do you think Intel wants to release GPUs? Why now?

It's BECAUSE the 1080 ti costs $1200.

Intel doesn't care about competition, they care about the free GPU money people are throwing around.

Intel will release their own 1080 TI, and charge you $1200 for it...

I don't think so. The only reason prices are so high right now is that AMD hasn't done anything that great in a long time and Nvidia has been in a league of their own for a while. They have no competition, so there is no push for them to release new technology and they can charge whatever they want. Just look at what Ryzen and Threadripper are doing to Intel. Intel used to be where Nvidia is now, but AMD is making them rush products out and taking a chunk of their market share.

Intel has been working on making GPUs for a long time; it's not something you get into overnight because the prices recently went up. They actually had plans to release a GPU called Larrabee back in 2010, but things didn't work out. They have recently been hiring some very well-known people with great track records in the industry.

Competition will always drive innovation, lower prices, and be better for the customer, that's just how things work. With competition, the companies work for us, they have to try and make something better than the competitor and sell it at a reasonable price. Without competition, things stagnate and they can release products with minimal improvements on as long of a time schedule as they want and charge through the roof.

I already wrote something similar elsewhere in this thread:

https://www.steamgifts.com/discussion/z7b9x/nvidia-announces-geforce-rtx-2080-and-rtx-2080-ti#u5H0IFb

There's a reason Intel didn't release their GPUs in 2010, but are releasing them now.

In 2010 the profit margins for each GPU card were much lower...

Ugh, they went with the 2000 naming convention instead of 1100. I was hoping they'd be sensible about it so we could have a consistent numbering scheme for decades to come.

Still, the fact that they didn't change the amount of VRAM in the cards means it's going to be safer to stay with Pascal for a bit longer.

I do agree, I'd rather it was 1180 as well but I can guess why. 1080 & 1180 look very similar at a glance, compared to 780, 880, 980 & 1080. While 2080 will be easier to distinguish for now, they're going to have the same problem if they ever get to 10,080.

Though I suppose it'll take them long enough to release 8 new generations of cards that they can start over again with the BFC 180 or something :)

They chose it so that it makes the previous generation look completely obsolete. It's nothing more than a marketing scheme designed to sell more units.

Agreed

All those figures from the keynote are only about raytracing... nothing about pure FPS in Witcher 3, GTA V, Origins, Hitman and other CURRENT games.

They did state that the Infiltrator demo ran at 72 FPS on the 2080 Ti compared to sub-30 on the 1080 Ti?

This was mainly due to their deep-learning-based AA, but it's still relevant enough to anyone who plays games with AA enabled.

That said, yes, I would still wait for reviews.

This is what happens when a company has no competition. These are Nvidia launch prices. Each line shows the last 3 generations of the same model (70, 80, 80 ti, Titan). Maybe Intel will release their GPUs in 2020 and be competitive enough to put an end to these ridiculous prices, because it doesn't look like AMD is doing much.

(970 - $330) (1070 - $450) (2070 - $600)

(980 - $550) (1080 - $700) (2080 - $800)

(980 ti - $650) (1080 ti - $700) (2080 ti - $1200)

(Titan X - $1000) (Titan Xp - $1200) (Titan V - $3000)
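To make the jumps in the list above easier to compare, here is a small sketch that turns those launch prices into generation-over-generation percentage increases. The prices are exactly the ones quoted in this comment; the tier labels and function names are made up for illustration.

```python
# Launch prices in USD as listed in the comment above, oldest generation first.
launch_prices = {
    "70 tier": [("970", 330), ("1070", 450), ("2070", 600)],
    "80 tier": [("980", 550), ("1080", 700), ("2080", 800)],
    "80 Ti tier": [("980 Ti", 650), ("1080 Ti", 700), ("2080 Ti", 1200)],
    "Titan tier": [("Titan X", 1000), ("Titan Xp", 1200), ("Titan V", 3000)],
}


def percent_increase(old, new):
    """Relative price change from old to new, in percent."""
    return (new - old) / old * 100.0


def generation_increases(cards):
    """Pair each card with its successor and compute the price change."""
    return [
        (prev_name, cur_name, percent_increase(prev_price, cur_price))
        for (prev_name, prev_price), (cur_name, cur_price) in zip(cards, cards[1:])
    ]


for tier, cards in launch_prices.items():
    for old_name, new_name, pct in generation_increases(cards):
        print(f"{tier}: {old_name} -> {new_name}: {pct:+.1f}%")
```

Note that these are nominal prices, so the percentages ignore inflation and any difference between Founders Edition and reference pricing.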

Yea GPU prices have become ridiculous.

I bought a GeForce 9800 GTX just 10 years ago for like $300-350. It was the best consumer-grade GPU available at the time. Now the equivalent (2080 Ti) costs about 4x as much.

You've quoted the Founder's Edition prices. That's just what nVidia will be selling them for.

Third parties (Asus, MSI, EVGA, etc.) will be basing their pricing on the "reference" prices, and adjusting for overclocking, aftermarket coolers, etc.

Pricing shakes out as follows:

GeForce RTX 2080 Ti Founders Edition: $1,199

GeForce RTX 2080 Ti Reference: $999

GeForce RTX 2080 Founders Edition: $799

GeForce RTX 2080 Reference: $699

GeForce RTX 2070 Founders Edition: $599

GeForce RTX 2070 Reference: $499

https://www.pcgamer.com/nvidia-rtx-2080-graphics-card-release-date/
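The gap between the two price columns above can be worked out directly. A minimal sketch, using only the Founders Edition and reference prices listed in this comment (the variable and function names are illustrative):

```python
# (Founders Edition price, reference price) in USD, as listed above.
rtx_prices = {
    "RTX 2080 Ti": (1199, 999),
    "RTX 2080": (799, 699),
    "RTX 2070": (599, 499),
}


def fe_premium(fe_price, reference_price):
    """Return the Founders Edition markup in dollars and as a percentage."""
    dollars = fe_price - reference_price
    percent = dollars / reference_price * 100.0
    return dollars, percent


for card, (fe, ref) in rtx_prices.items():
    dollars, percent = fe_premium(fe, ref)
    print(f"{card}: FE costs ${dollars} more than reference ({percent:.1f}%)")
```

Whether third-party cards actually ship at the reference price is a separate question that this arithmetic cannot answer.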

I'll believe it when I see it. I quoted Nvidia prices for a reason, because that is actually what the cards sell for. Nvidia pulled this BS marketing scheme with the 10 series as well and the 3rd party manufacturers weren't selling at MSRP. Actually many of them sold for over the FE price and we can already see that happening with 3rd party pricing on the 20 series.

I have a feeling the 20 series will eventually come down to the MSRP price at some point this generation though because these prices are outlandishly high and because of that, they may not sell as many as they are hoping.

Comment has been collapsed.

I'm just giving you supplementary information. Whether or not you "believe it" is entirely up to you.

EDIT: I just checked, and I only paid $440 for my first EVGA GTX 1070 SSC a month after release, and only that late because they were out of stock until then. ACX 3.0 coolers and overclocked out of the box. The GTX 1070 reference card was released on June 10th, my purchase was made on July 8th.

Comment has been collapsed.

Only paid $440? You just proved my point. Nvidia claimed a 1070 MSRP of $379 and you paid $440.

Edit: Also, I think the SSC is one of the cheaper cards from EVGA, they probably sell another 5 or 6 cards that are more expensive and I bet they were all priced over the $449 that the FE was quoted at and way over the 3rd party MSRP of $379. A very large majority of people that buy Nvidia cards are going to buy a card that costs as much if not more than the FE price, but Nvidia tries to say they will be cheaper. There are very few cards that are cheaper, it is just BS marketing and they know it.

Comment has been collapsed.

1) It was EVGA - they tend to charge more for their warranty coverage.

2) It was overclocked out of the box, instantly raising the price another $20.

3) ACX 3.0 coolers, by far better than reference blowers.

4) Custom PCB and power delivery.

In other words, my cards are far from the reference design. The standard version of the same card (meaning: not overclocked) at that time was $420, and both are lower than the $450 you're stating.

Comment has been collapsed.

You are not making sense. I am saying that the cards do not sell for the low 3rd party MSRP that Nvidia quotes, and you say you bought one for $60 more than MSRP. EVGA has 13 1070s on their site right now, and they are selling from $489 to $579. Even the simple blower-style card is $499. It is over 2 years after the cards launched and the next generation has now launched, but the GPUs are still well over the MSRP that Nvidia quoted. These cards should be reduced below MSRP at this point. The Nvidia MSRP is marketing BS and the quoted prices should be ignored. The price of the cards is what they are actually selling for, not some made-up number that almost nobody pays.

Comment has been collapsed.

I have no idea why their prices are so high right now. I'm telling you what I paid for it less than a month after release from Newegg. As I stated, it would have been a day one purchase, except they were out of stock (for me, who received constant emails they were in-stock while I was at work and unable to order) until July 8th.

I'm also telling you why I paid extra - for the overclock, the aftermarket cooler, and the extra distance EVGA goes with their warranty and RMA service. And then I'm telling you that it's still less than the $450 you're stating.

This is the exact card I purchased, and here is a screenshot of the invoice from July 8th, 2016. Edit: For reference, here is the "reference" GTX 1070. I think my cards were worth the extra dosh.

Comment has been collapsed.

And what I'm saying is that pretty much every 3rd party card comes with a better cooler, is overclocked, and costs over the Nvidia stated MSRP, just like the one you bought. Even the reference models are usually above the MSRP. The stated MSRP prices don't mean anything if companies aren't selling them for that cheap. They quote artificially low prices to make the cards sound like a better deal, but then you can't actually find them for that price.

Comment has been collapsed.

"And what I'm saying is that pretty much every 3rd party card comes with a better cooler, is overclocked, and costs over the Nvidia stated MSRP, just like the one you bought."

And you've also just stated why they cost over nVidia's MSRP - a better cooler and overclocked.

Have you used a reference card? They're horrendously noisy under load. The cooler alone is worth the extra $40 on the non-SC version of my cards. And then I paid an extra $20 for the overclock (and have overclocked it much higher than that, actually).

Just an afterthought, but those newer coolers and chip tech also saved me another $150 per card for water-blocks. Been running them on air, and they're damned near dead silent under load.

Comment has been collapsed.

They are not that expensive compared to the other cards that EVGA sells. Also, almost every 3rd party card is overclocked and I don't think they are cherry picked. EVGA has more expensive liquid cooled and FTW cards where they would use the cherry picked chips.

Comment has been collapsed.

I'm giving you information, and you're just rebutting it with opinion. I am telling you, the SC cards were $20 more expensive than the non-SC version. Period.

And EVGA has been cherry-picking cards for their overclocked series since the 8000 series:

https://www.theinquirer.net/inquirer/news/1000432/inq-takes-dive-black-pearls

Every Nvidia 8800GTX board that comes into the office finds itself placed on a testbed and submitted to torture testing at the different clocks, which form EVGA's line-up. The clocks are 621/2000 for the SuperClocked variant and 626/2000 for the ACS3 or the Black Pearl one.

Comment has been collapsed.

Didn't find anything here about it, so here you go. This will change game graphics a little.

Edit:

Here are some demos for the RTX along with some new trailers for upcoming games.

According to Nvidia, this is the result of 10 years of work, trial and error, so this is just the start of companies embracing the new tech. For now RTX cards are expensive, but in the fast-evolving world of technology they will arguably soon be overshadowed by the 3080 or, why not, some new AMD cards, for the sake of competition.

Comment has been collapsed.